Real-Time Client Updates Without the Overhead

What options do we have for implementing polling integrations on AWS?

Real-time updates are essential for many web applications, from chat rooms to live data visualization. A traditional technique for achieving them is called "polling". In this article, I give a brief overview of two essential client-initiated techniques: "short-polling" and "long-polling". You will learn how the two approaches differ and how they shape the underlying implementation on various AWS services.

Polling in several flavors

Short-Polling

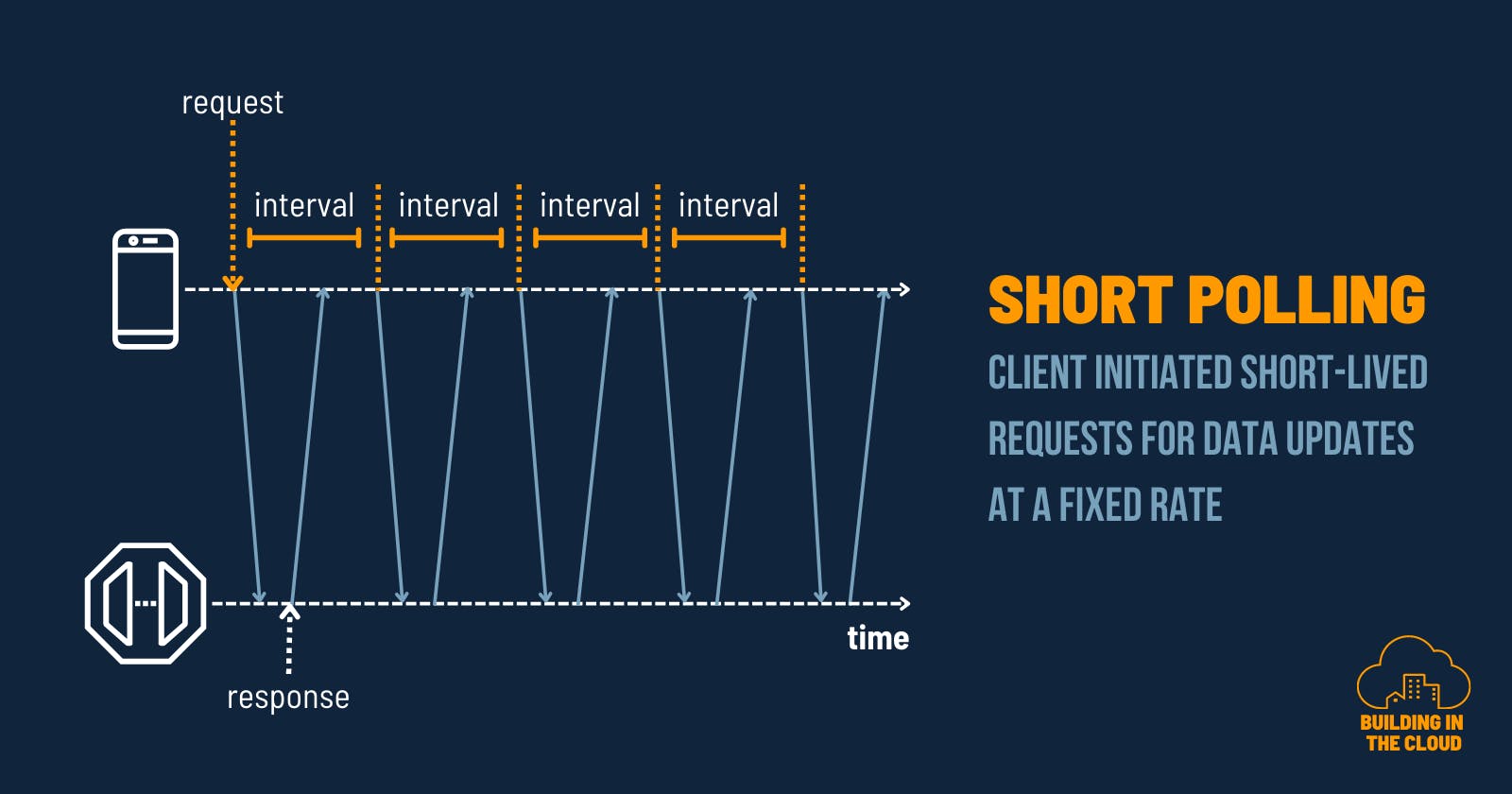

Polling (also called short-polling or Ajax polling) is like being on a road trip with your kids when they ask every minute "Are we there?" Clients (kids) send regular requests to the server (dad) to check for updates. My kids decide how often they ask. Typically, this is a fixed interval, for example every X seconds. Sometimes both kids and clients go wild and shorten the interval even further, driving up the stress level of the server (or of me as a dad). 🤯
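To make the loop concrete, here is a minimal Python sketch of a short-polling client. `fetch` and `is_there` are hypothetical callables standing in for the HTTP request and the check on its response, and `sleep` is injectable so the loop can be exercised without actually waiting. This variant waits the interval after each response rather than firing on a fixed wall-clock timer.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def short_poll(
    fetch: Callable[[], T],
    is_there: Callable[[T], bool],
    interval_s: float,
    max_attempts: int,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[T]:
    """Ask the server "Are we there?" until the answer is yes or we give up."""
    for attempt in range(max_attempts):
        answer = fetch()  # one complete request/response cycle
        if is_there(answer):
            return answer
        if attempt < max_attempts - 1:
            # Wait a fixed interval *after* the response before asking again.
            sleep(interval_s)
    return None  # gave up without seeing the update
```

Called with the real `time.sleep`, `short_poll(fetch, is_there, interval_s=3.0, max_attempts=100)` asks every 3 seconds for at most about 5 minutes.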

The browser's ability to send HTTP requests from client-side JavaScript (XMLHttpRequest) opened up a whole new world of options in the early days of Web 2.0, and polling was one of the things it made possible. Polling impresses with how simple it is to implement, but it is not particularly efficient, and there are important things to consider when building such integrations, even on modern cloud platforms.

When I worked for a German broadcasting station, we implemented polling in a companion voting app for a live TV show. The polling target was a configuration file hosted on Amazon S3. Backend processes updated the configuration file whenever a new vote was activated. This happened roughly every 5 minutes, while the client requested the configuration every 3 seconds. Most of the time, the client fetched data it already had. Not that efficient. Now imagine pointing that polling at a good old Apache Tomcat server, where every request is bound to a running thread. More clients combined with short request intervals can make your data center burn.
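The waste in this example is easy to quantify: with an update every 300 seconds and a poll every 3 seconds, each client sends 100 requests per update, and at best only the first one after the update brings news. The Python sketch below does that arithmetic; the audience size in the usage line is a made-up figure for illustration, not a number from the show.

```python
from typing import Tuple


def polling_cost(update_every_s: float, poll_every_s: float, clients: int) -> Tuple[int, int]:
    """Return (requests per update window, requests that only re-fetch known data)."""
    per_client = int(update_every_s // poll_every_s)  # polls per client between two updates
    total = per_client * clients
    # At best, each client's first poll after an update brings news; the rest repeat it.
    redundant = max(total - clients, 0)
    return total, redundant
```

For a hypothetical 10,000 concurrent viewers, `polling_cost(300, 3, 10_000)` gives `(1000000, 990000)`: a million requests every five minutes, 99% of which return data the client already has.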

Because requests arrive at constant intervals whether or not anything has changed, polling raises the risk of high server load and latency. Choosing the right interval and the right integration is key to a good user experience. Bear in mind that if your request interval is shorter than the server's average response time, a client that fires on a fixed timer sends new requests faster than the server can answer them, and unanswered requests start to pile up. This will likely end in a mess.
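The pile-up follows from simple arithmetic: a client firing every `interval_s` seconds while each response takes `response_time_s` seconds keeps roughly `response_time_s / interval_s` requests open at once (Little's law). The small Python simulation below counts them for a timer-driven client; the function name and default are illustrative. Since a busy server answers more slowly, the count tends to grow exactly when the server can least afford it, whereas a client that waits for each response before scheduling the next request never has more than one open.

```python
from typing import List


def peak_in_flight(interval_s: float, response_time_s: float, requests: int = 50) -> int:
    """Peak number of one client's requests open at once when it fires on a fixed timer."""
    starts: List[float] = [i * interval_s for i in range(requests)]
    peak = 0
    for t in starts:
        # A request is open at time t if it started at or before t and has not finished yet.
        open_now = sum(1 for s in starts if s <= t < s + response_time_s)
        peak = max(peak, open_now)
    return peak
```

With a 3-second timer, `peak_in_flight(3.0, 1.0)` is 1, but once responses slow down to 10 seconds, `peak_in_flight(3.0, 10.0)` is 4 per client.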

Long-Polling

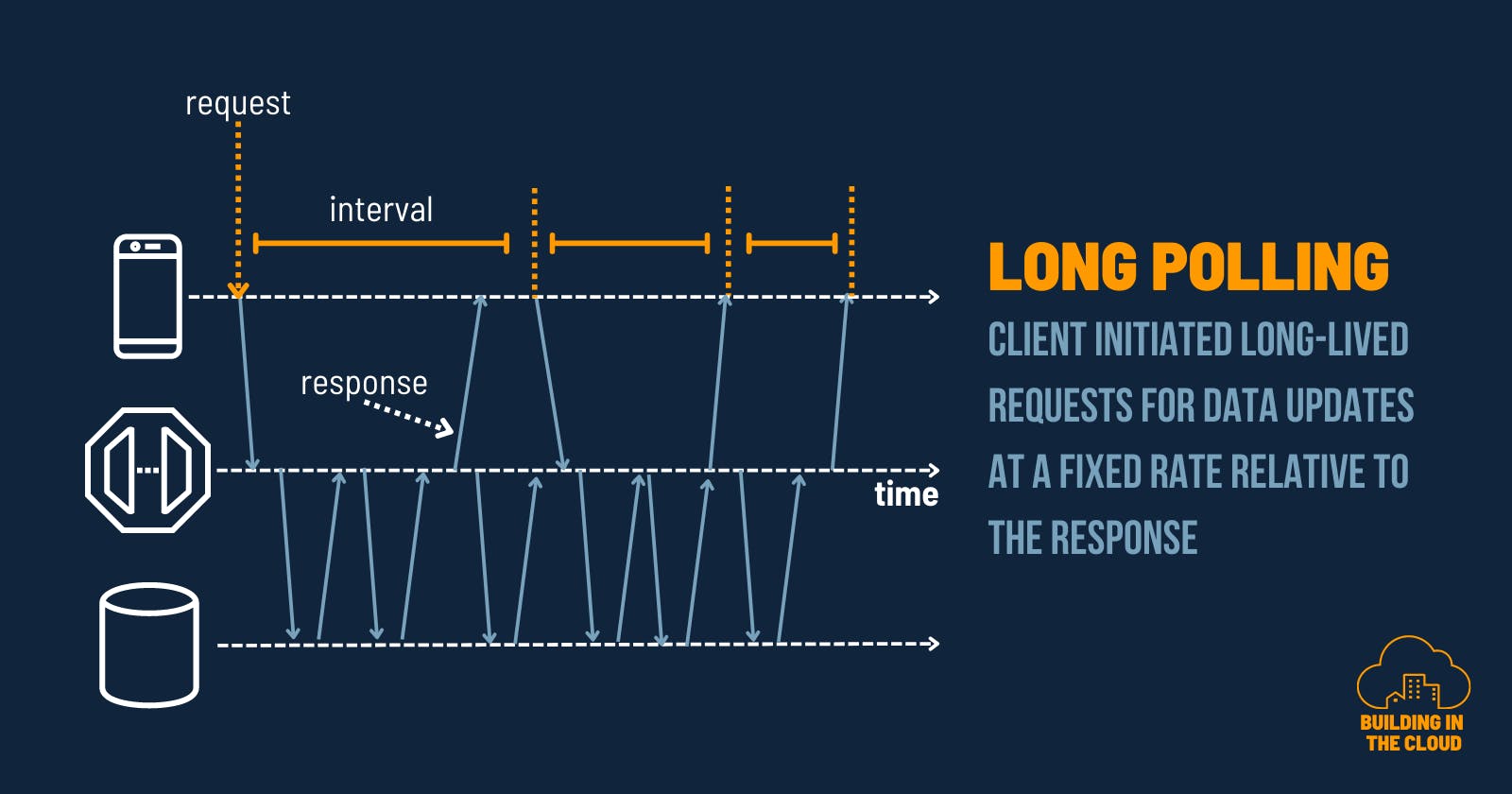

Besides traditional short (or Ajax) polling, there is so-called "long-polling", another interesting approach to providing client applications with data updates.

Picking up the analogy of a road trip with my family, long-polling would be my kids (client) asking me (server) "Are we there?" but more patiently (god, how cool would that be 😇). They hit me with the question and then wait in silence while I check the navigation system for updates.

What makes this kind of polling "long" is not necessarily that the client stretches its request interval to several seconds or even minutes. It is that the client keeps a TCP connection open and waits a longer time for the server's response. On the server side, this means the connection is held open until either the server hits a timeout or detects a data change it can respond with. Resources are blocked on both ends of the connection, waiting for a signal to close it: a captured data change or a technical timeout. Long-polling is therefore a contract both sides have to agree on. For example, the client must treat a timeout response as "nothing new yet" and reconnect right away rather than as an error. If your client assumes it is talking to a short-polling endpoint, this will end up in a huge mess 🤣
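A minimal server-side sketch of that contract using Python's asyncio, assuming the client sends the last version it has seen: the handler parks on a condition until a newer version is published or `timeout_s` expires, and answers `None` on timeout. `LongPollChannel`, `publish`, and `wait_for_change` are hypothetical names, not part of any AWS or web framework API.

```python
import asyncio
from typing import Any, Optional, Tuple


class LongPollChannel:
    """Holds waiting requests until the data changes or they time out."""

    def __init__(self) -> None:
        self._version = 0
        self._data: Any = None
        self._changed = asyncio.Condition()

    async def publish(self, data: Any) -> None:
        """Called by the backend when a data change is captured; wakes all waiters."""
        async with self._changed:
            self._version += 1
            self._data = data
            self._changed.notify_all()

    async def wait_for_change(
        self, since_version: int, timeout_s: float
    ) -> Optional[Tuple[int, Any]]:
        """Return (version, data) once newer than since_version, or None on timeout."""
        async with self._changed:
            try:
                # Returns immediately if the client is already behind.
                await asyncio.wait_for(
                    self._changed.wait_for(lambda: self._version > since_version),
                    timeout=timeout_s,
                )
            except asyncio.TimeoutError:
                return None  # the technical timeout: nothing new, the client reconnects
            return self._version, self._data
```

Each waiting request here is a suspended coroutine rather than a blocked thread, so one process can hold many of them; a thread-per-request model pays a whole thread for each.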

Keeping the connection between client and server open is the key characteristic of long-polling. It reduces chattiness but has implications for the underlying implementation: we need something capable of holding a client connection open for a longer time, whereas much of the modern web is optimized to finish a request-response cycle as fast as possible. The server also has to manage all those open connections at whatever scale you expect.

Resources are finite, and so are the threads on my good old Apache Tomcat server. Appropriate timeouts and error handling are important for reliability. Done right, though, long-polling can be an effective technique that reduces server load and network overhead.
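On the client side, timeouts and error handling mean telling a server timeout apart from a real failure. Here is a hedged sketch in Python: `request` is a hypothetical callable that sends the last seen version and blocks until the server answers; it returns `None` when the server's timeout fires and raises `ConnectionError` when something actually breaks. Timeouts lead straight to the next request, while errors trigger exponential backoff with jitter so a struggling server is not hit by every client at the same moment. The backoff defaults are illustrative, not recommendations.

```python
import random
import time
from typing import Any, Callable, Optional, Tuple

Response = Optional[Tuple[int, Any]]  # (version, data), or None if the server timed out


def long_poll_client(
    request: Callable[[int], Response],
    handle: Callable[[Any], None],
    rounds: int,
    base_backoff_s: float = 1.0,
    max_backoff_s: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Run `rounds` long-poll requests and return the last version seen."""
    version = 0
    failures = 0
    for _ in range(rounds):
        try:
            result = request(version)  # blocks until the server answers or times out
        except ConnectionError:
            # A real error: back off exponentially, with full jitter, before reconnecting.
            failures += 1
            delay = min(max_backoff_s, base_backoff_s * 2 ** (failures - 1))
            sleep(random.uniform(0, delay))
            continue
        failures = 0
        if result is None:
            continue  # a server timeout is part of the contract: reconnect immediately
        version, data = result
        handle(data)
    return version
```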

Implementation considerations

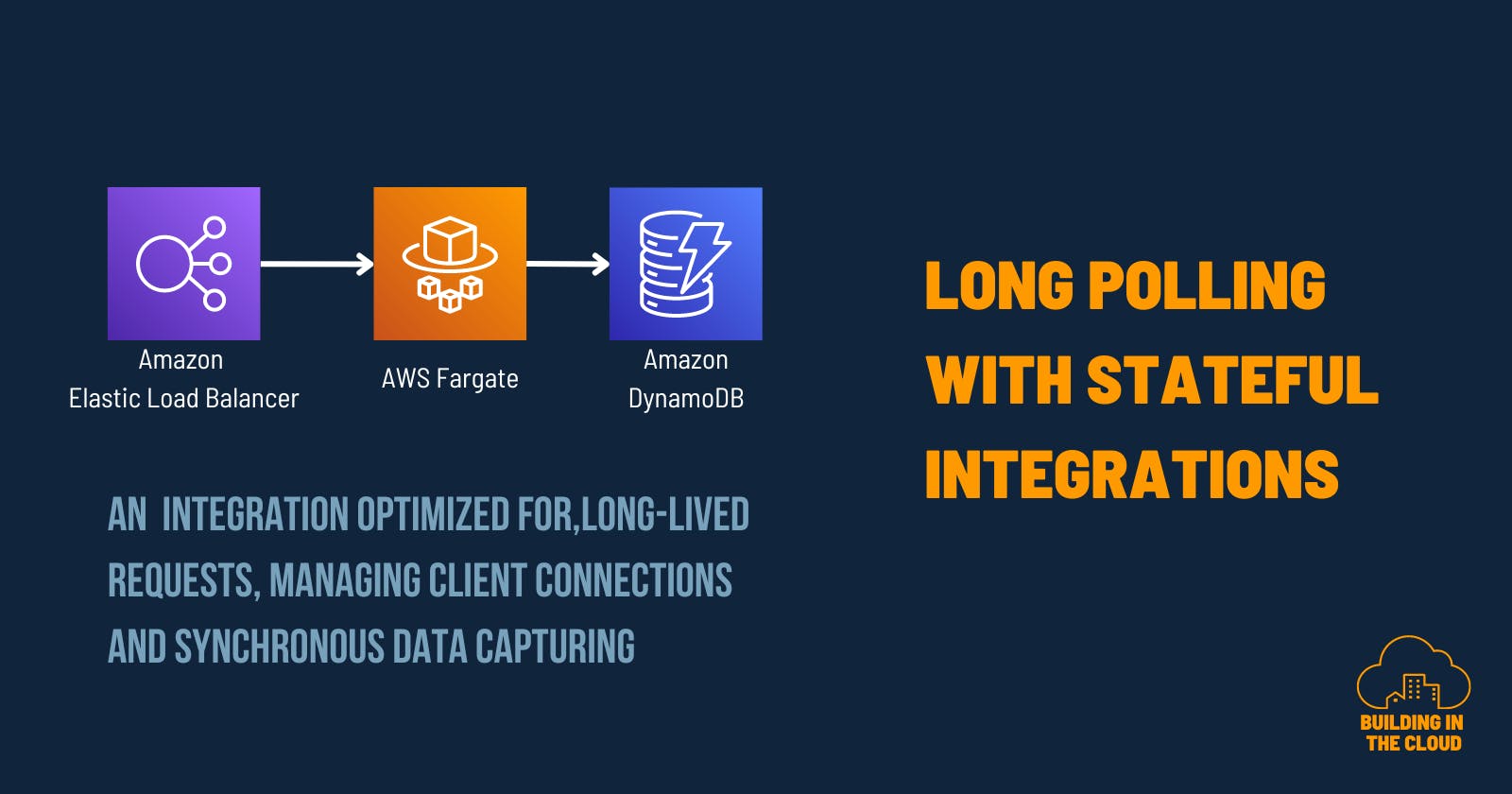

Nowadays, managed API services such as Amazon API Gateway or AWS AppSync are an excellent starting point for polling integrations. To reduce latency and improve scaling, I recommend direct service integrations whenever possible, for example reading data directly from an Amazon DynamoDB table without any additional compute layer in between. Backend processes capture data changes and write them to the database, where the next client requests pick them up. Whenever you put compute services behind your API, you have to ensure that this additional layer scales too.
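With a direct integration, API Gateway itself sends a DynamoDB `GetItem` request and transforms the typed response through mapping templates, so none of your own code runs per request. To make the data shapes concrete, the Python sketch below mirrors the two transformations such templates would perform; the `VotingConfig` table and its `showId` key are hypothetical, and in a real setup this logic lives in the integration's templates rather than in Python.

```python
from decimal import Decimal
from typing import Any, Dict, Optional


def get_item_request(table: str, show_id: str) -> Dict[str, Any]:
    """Body of a DynamoDB GetItem call for a hypothetical table keyed by showId."""
    return {"TableName": table, "Key": {"showId": {"S": show_id}}}


def from_attribute(value: Dict[str, Any]) -> Any:
    """Convert one typed DynamoDB attribute value into a plain Python value."""
    [(kind, raw)] = value.items()
    if kind == "S":
        return raw
    if kind == "N":
        return Decimal(raw)  # DynamoDB transmits numbers as strings
    if kind == "BOOL":
        return raw
    if kind == "NULL":
        return None
    if kind == "L":
        return [from_attribute(v) for v in raw]
    if kind == "M":
        return {k: from_attribute(v) for k, v in raw.items()}
    raise ValueError(f"unsupported attribute type: {kind}")


def response_body(get_item_response: Dict[str, Any]) -> Optional[Dict[str, Any]]:
    """Plain JSON-like body for the client, or None if the item does not exist."""
    item = get_item_response.get("Item")
    if item is None:
        return None  # GetItem omits "Item" when no item matches the key
    return {k: from_attribute(v) for k, v in item.items()}
```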

For long-polling integrations, we need something that can keep a connection open while regularly checking for updates in the background. That is hard to achieve with direct service integrations in Amazon API Gateway or AWS AppSync: they perform a single read against the backing service and return its result immediately, with no way to wait for a change. Instead, we need a compute layer between the API and the database that can hold those connections. Here, I would go with something containerized, such as AWS Fargate, integrated with a database like Amazon DynamoDB to watch for data changes. In some situations, I might even question using Amazon API Gateway at all and connect my clients directly to the backend service through an Application Load Balancer, for example because API Gateway's integration timeout (29 seconds by default for REST APIs) caps how long a request can be held. It depends on which features of the managed API service your use case needs.
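The "checking for updates in the background" part could be a small loop inside the Fargate service. Below is a minimal asyncio sketch, assuming a hypothetical `read_current` coroutine that reads a record with a version attribute from DynamoDB and a hypothetical `publish` coroutine that wakes the waiting long-poll requests. The design point: only the backend polls the database, at its own pace, no matter how many clients are connected.

```python
import asyncio
from typing import Any, Awaitable, Callable, Optional, Tuple


async def watch_for_changes(
    read_current: Callable[[], Awaitable[Tuple[int, Any]]],
    publish: Callable[[Any], Awaitable[None]],
    check_every_s: float,
    stop: asyncio.Event,
) -> None:
    """Poll the database on behalf of all clients and publish only real changes."""
    last_version: Optional[int] = None
    while not stop.is_set():
        version, data = await read_current()
        if version != last_version:
            last_version = version
            await publish(data)
        try:
            # Sleep until the next check, but wake up early if asked to stop.
            await asyncio.wait_for(stop.wait(), timeout=check_every_s)
        except asyncio.TimeoutError:
            pass
```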

Conclusion

Real-time updates are essential for many web applications. Two popular techniques for achieving them are short-polling and long-polling. What both have in common is that the client initiates the process of getting updates by regularly asking the server for fresh data.

Short-polling is a simple but potentially risky technique if clients get out of control. Long-polling might be the more reliable technique and reduces chattiness, but it requires additional thought about how the server manages the lifecycle of client connections. By weighing the advantages and disadvantages of each technique, developers can choose the best approach for their real-time update needs. Good observability is key to keeping your polling-based integrations under control.